Module 1: Terminology & Concepts

As long as we have used computers, there has been interest in quantifying the associated risk. In the 1960s, computers were used for data processing, and the concern was information protection. Over time, a variety of mathematical and probabilistic methods were explored: algorithms were tested, models were developed, and thousands of white papers were written. Some approaches were relatively simple; others were extremely complex. In 2006, the Factor Analysis of Information Risk (FAIR) method was introduced. It gradually gained popularity, became recognized as an open standard, and has been referenced in several cybersecurity risk management frameworks. Inevitably, the threat landscape has evolved, and interest in methods and models for quantifying cybersecurity risk has continued alongside it.

In its simplest form, Cybersecurity Risk Quantification, also called Cyber Risk Quantification (CRQ), involves converting risk uncertainty into numerical values so that leadership can more easily differentiate one risk from another and make better risk-informed decisions.

This book aims to inform the reader of today’s most commonly applied mathematical methods and review several useful models. In addition, we will cover two key activities foundational to your success in this work: vulnerability analysis and effectively communicating risk.

The Origins of Cybersecurity Risk Quantification

CRQ has a history of contradictions and difficulties, with no widely adopted solution.

In her paper “Measuring Risk: Computer Security Metrics, Automation, and Learning,” Rebecca Slayton of Cornell University does an excellent job of reviewing the debate over security risk assessments in the 1970s and 1980s. She emphasizes that the most valuable part of risk management is what is learned from the process. The same can be said of quantifying cyber risk.

Some of the earliest papers on quantifying cybersecurity risk date back to the 1960s, when the Department of Defense sponsored studies on how to assure the security of military computer systems. A 1967 Defense Science Board panel chaired by Willis Ware of RAND noted that “it is difficult to make a quantitative measurement of the security risk-level” of “security-controlling systems” and that this posed challenges for “policy decisions about security control in computer systems.” The panel's report, “Security Controls for Computer Systems: Report of the Defense Science Board Task Force on Computer Security” (Office of the Director of Defense Research and Engineering), was published by the RAND Corporation in 1970 and reissued in October 1979.

In 1972, the US Department of Commerce National Bureau of Standards (NBS) and ACM cosponsored the workshop “Controlled Accessibility”. One of the five working groups at the workshop focused on measurements; it explained that metrics were needed. It proposed a new “data security engineering discipline” that would straddle hard and soft engineering areas and identified four specific areas for quantification: risk assessment, cost-effectiveness, secure-worthiness, and measures of system penetration.

RAND researchers Rein Turn and Norman Shapiro developed formal mathematical expressions for quantifying cyber risk, but their efforts were of limited practical use because the necessary data was lacking. At the 1972 workshop, Turn was a member of the Measurements Working Group, which felt that risk analysis was “perhaps the most advanced” area of metrics. Drawing upon the work of IBM computer engineer and fellow group member Robert Courtney, the group proposed estimating the probability and negative financial impact of six risks: destroying, disclosing, or modifying data, each of which could happen intentionally or unintentionally. This would allow risk assessors to calculate the Annual Loss Expectancy (ALE).

ALE is the estimated annual financial loss from a risk, based on the Annualized Rate of Occurrence (ARO) and the Single Loss Expectancy (SLE). ARO is the expected number of incidents in one year, and SLE is the potential financial loss from a single incident. ALE is calculated as ALE = ARO × SLE.
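
To make the calculation concrete, the sketch below applies ALE = ARO × SLE to the six risk categories described above (destroying, disclosing, or modifying data, each intentionally or unintentionally). The ARO and SLE figures are hypothetical placeholders, not data from the original studies; in practice they would come from historical records, knowledge of the system, and expert judgment.

```python
# Hypothetical estimates for illustration only.
# Key: (action, intent) -> (ARO in incidents per year, SLE in dollars per incident)
ESTIMATES = {
    ("destroy", "intentional"): (0.1, 250_000),
    ("destroy", "unintentional"): (0.5, 40_000),
    ("disclose", "intentional"): (0.2, 500_000),
    ("disclose", "unintentional"): (1.0, 20_000),
    ("modify", "intentional"): (0.05, 300_000),
    ("modify", "unintentional"): (2.0, 5_000),
}


def ale(aro: float, sle: float) -> float:
    """Annual Loss Expectancy: ALE = ARO x SLE."""
    return aro * sle


def ale_by_risk(estimates: dict) -> dict:
    """Return the ALE for each (action, intent) risk."""
    return {risk: ale(aro, sle) for risk, (aro, sle) in estimates.items()}


per_risk = ale_by_risk(ESTIMATES)
# List risks from largest to smallest expected annual loss.
for (action, intent), value in sorted(per_risk.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{action:>8} / {intent:<13} ALE = ${value:,.0f}")
print(f"Total ALE = ${sum(per_risk.values()):,.0f}")  # $190,000 with these inputs
```

Ranking by ALE shows where expected losses concentrate. With these inputs, intentional modification (SLE $300,000, ARO 0.05) ranks below unintentional disclosure (SLE $20,000, ARO 1.0), because a rare, costly incident can carry a smaller expected annual loss than a frequent, cheaper one.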

Risk analysis turned out to be much more difficult than expected, as became clear when it became the cornerstone of efforts to secure federal data processing facilities after the Privacy Act of 1974. NBS issued guidelines, as did the Office of Management and Budget (OMB), directing all agencies to analyze risk. Although the guidelines emphasized the importance of risk analysis, they had little to say about how to actually conduct it. The 1979 guidelines classified risks into confidentiality, integrity, and availability. Agencies were expected to use “a combination of historical data…knowledge of the system, and … experience and judgment” to make rough estimates of the likely frequency and impact of adverse events on application systems. Risk analysis became a process of accounting for organizational assets and estimating the likelihood and cost of something terrible happening. Notably, the focus was on order-of-magnitude estimates, to avoid getting caught up in debates over precise values (National Bureau of Standards, “Guidelines for Automatic Data Processing Risk Analysis,” Federal Information Processing Standards Publication 65, Government Printing Office, 1979; R.H. Courtney, “Security Risk Assessment in Electronic Data Processing Systems,” Proc. AFIPS National Computer Conf., 1977, p. 97).
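
The emphasis on order-of-magnitude estimates can be illustrated with a small sketch that rounds each rough estimate to the nearest power of ten before multiplying. The rounding rule is an assumed simplification for illustration, not the specific rating procedure defined in FIPS PUB 65, and the input values are hypothetical.

```python
import math


def order_of_magnitude(value: float) -> float:
    """Round a positive estimate to the nearest power of ten on a log scale."""
    if value <= 0:
        raise ValueError("estimates must be positive")
    return 10 ** round(math.log10(value))


def rough_ale(frequency_per_year: float, impact_dollars: float) -> float:
    """Multiply order-of-magnitude frequency and impact to get a rough annual loss."""
    return order_of_magnitude(frequency_per_year) * order_of_magnitude(impact_dollars)


# Hypothetical rough estimates: "a few times a year" and "tens of thousands of dollars".
print(rough_ale(3, 45_000))  # 1 * 100000 -> 100000
```

Because 45,000 lies above the geometric midpoint between 10,000 and 100,000 (about 31,623), it rounds up. The goal is agreement on the scale of the loss rather than debate over precise values.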

In the 1970s, Lance Hoffman and Don Clement, in their paper “SECURATE – Security evaluation and analysis using fuzzy metrics,” developed a model that used fuzzy logic and qualitative labels such as “very high,” “high,” and “low” to quantify risk. The model's value lay in using “intuitive linguistic variables” for range variables, and the process was valuable for the way it structured thinking about computer security. However, the value of what participants learned was often overlooked.
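
To show what a linguistic variable looks like in practice, the sketch below maps a numeric risk score onto overlapping labels using triangular membership functions, a common fuzzy-logic construction. The 0 to 10 scale, the added “medium” label, and the breakpoints are hypothetical assumptions; this is not a reconstruction of the SECURATE model.

```python
# Hypothetical linguistic terms on a 0-10 risk scale.
# Each term is a triangle (a, b, c): membership rises from a to a peak of 1 at b, then falls to c.
TERMS = {
    "low": (0.0, 0.0, 5.0),
    "medium": (2.5, 5.0, 7.5),
    "high": (5.0, 7.5, 10.0),
    "very high": (7.5, 10.0, 10.0),
}


def triangular_membership(x: float, a: float, b: float, c: float) -> float:
    """Degree (0 to 1) to which x belongs to the triangular fuzzy set (a, b, c)."""
    if x < a or x > c:
        return 0.0
    if x == b:
        return 1.0
    if x < b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def describe(x: float) -> dict:
    """Membership of x in every linguistic term."""
    return {term: triangular_membership(x, *abc) for term, abc in TERMS.items()}


def best_label(x: float) -> str:
    """The linguistic term with the highest membership."""
    memberships = describe(x)
    return max(memberships, key=memberships.get)


print(describe(8.0))    # partly "high" (0.8) and partly "very high" (0.2)
print(best_label(8.0))  # high
```

Because a single score can belong partly to two labels, the approach reflects the imprecision of terms like “high” instead of forcing a hard cutoff between categories.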

To paraphrase Rebecca Slayton, “Regulators value the output of risk analysis as a means of administrative control and improving efficiency. Practitioners derive value from the learning gained in the process of measuring risk. Automated methods appeal to those who want to avoid learning about their own vulnerabilities. A simple, order-of-magnitude (initial analysis) method based on existing vulnerabilities is a viable alternative.”

Three Modern CRQ Frameworks

The Operationally Critical Threat, Asset, and Vulnerability Evaluation (OCTAVE) was developed in 2001 at Carnegie Mellon University (CMU) for the US Department of Defense. OCTAVE has three phases: building asset-based threat profiles, identifying infrastructure vulnerabilities, and developing security strategies. OCTAVE is focused on operational risk and security practices.

In 2006, Jack Jones published “An Introduction to Factor Analysis of Information Risk (FAIR),” which presents a modern-day Value-at-Risk model for quantifying cyber risk in financial terms. FAIR™ uses risk factoring to decompose information risk into its fundamental parts and presents a taxonomy describing how the risk factors combine to drive risk. It describes vulnerability as “a weakness that may be exploited” and risk as “the probable frequency and probable magnitude of future loss.”
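
A highly simplified Monte Carlo sketch can show how FAIR-style factors combine. It assumes the top levels of the FAIR taxonomy, in which Loss Event Frequency is Threat Event Frequency multiplied by Vulnerability (the probability that a threat event becomes a loss event), and annualized loss is driven by Loss Event Frequency and Loss Magnitude. The triangular distributions, the single loss magnitude drawn per trial, and all input ranges are hypothetical simplifications, not the full FAIR standard or any tool's implementation.

```python
import random
import statistics


def sample(rng: random.Random, estimate: tuple) -> float:
    """Draw from a triangular distribution given (low, most_likely, high)."""
    low, most_likely, high = estimate
    return rng.triangular(low, high, most_likely)  # argument order: low, high, mode


def simulate_annual_loss(tef, vulnerability, loss_magnitude, trials=10_000, seed=1):
    """Simulate annualized loss = (TEF x Vulnerability) x Loss Magnitude.

    tef: threat events per year; vulnerability: probability (0 to 1);
    loss_magnitude: dollars per loss event. Each is a (low, most_likely, high) tuple.
    """
    rng = random.Random(seed)  # seeded for reproducible results
    losses = []
    for _ in range(trials):
        loss_event_frequency = sample(rng, tef) * sample(rng, vulnerability)
        losses.append(loss_event_frequency * sample(rng, loss_magnitude))
    return losses


# Hypothetical calibrated estimates for one risk scenario.
losses = simulate_annual_loss(
    tef=(1, 4, 12),                            # threat events per year
    vulnerability=(0.10, 0.25, 0.50),          # chance a threat event becomes a loss event
    loss_magnitude=(10_000, 50_000, 400_000),  # dollars per loss event
)
p90 = statistics.quantiles(losses, n=10)[-1]  # 90th percentile
print(f"Mean annualized loss: ${statistics.mean(losses):,.0f}")
print(f"90th percentile:      ${p90:,.0f}")
```

Reporting a distribution (for example, the mean and a high percentile) rather than a single number matches FAIR's framing of risk as the probable frequency and probable magnitude of future loss.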

In 2020, the Facilitated Risk Analysis Process (FRAP) was published as a formal process designed to enable fast and simple risk analysis. It includes a brainstorming session to list threats, the assignment of simple probability estimates using High/Medium/Low labels, the assignment of simple High/Medium/Low impact ratings, the identification of applicable controls, and a management summary. It encapsulates the basic risk analysis process and focuses on developing an initial analysis.
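
The label-based steps above can be sketched as a small lookup: each brainstormed threat receives High/Medium/Low probability and impact labels, a matrix combines them into one rating, and the results are sorted into a summary. The matrix values, threats, and controls are illustrative assumptions, not taken from FRAP.

```python
LEVELS = ("Low", "Medium", "High")

# Hypothetical 3x3 matrix: MATRIX[probability][impact] -> overall risk rating.
MATRIX = {
    "High":   {"Low": "Medium", "Medium": "High",   "High": "High"},
    "Medium": {"Low": "Low",    "Medium": "Medium", "High": "High"},
    "Low":    {"Low": "Low",    "Medium": "Low",    "High": "Medium"},
}
RANK = {"High": 0, "Medium": 1, "Low": 2}  # sort order for the summary


def rate(probability: str, impact: str) -> str:
    """Combine High/Medium/Low probability and impact labels into one rating."""
    if probability not in LEVELS or impact not in LEVELS:
        raise ValueError("labels must be Low, Medium, or High")
    return MATRIX[probability][impact]


def management_summary(threats):
    """threats: list of (threat, probability, impact, control); highest ratings first."""
    rated = [(rate(p, i), threat, control) for threat, p, i, control in threats]
    return sorted(rated, key=lambda row: RANK[row[0]])


# Hypothetical output of a brainstorming session.
threats = [
    ("Laptop theft", "High", "Low", "Full-disk encryption"),
    ("Ransomware on file servers", "Medium", "High", "Offline backups"),
    ("Data entry errors", "High", "Medium", "Input validation"),
]
for rating, threat, control in management_summary(threats):
    print(f"{rating:<6} {threat:<28} control: {control}")
```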

The Role of Risk Quantification in Risk Management

A Brief History of Risk Quantification in Risk Management Practices

In the early 1970s, the National Bureau of Standards (NBS) recognized the need to measure and analyze risk to information systems. (See NIST 2020/2022 Cybersecurity History – Risk Management)

Published in October 2001, ISO 17799 addresses risk management: identifying assets, threats, and vulnerabilities, and quantifying the risk. Controls can then be used to avoid, transfer, or reduce the risk to acceptable levels. In ISO 17799, harm is quantified numerically to reflect the impact of a successful exploit, and risk is calculated as risk = probability × harm. Probabilities of events are expressed as frequencies (over days, weeks, or months), and risk is then calculated for each combination of threat and vulnerability.
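
The calculation above can be sketched for a few threat-vulnerability pairs. This sketch assumes that event frequencies observed per day, week, or month are annualized and multiplied by the harm of one successful exploit, giving an expected annual harm; the pairs, frequencies, and dollar values are hypothetical, and 52 weeks per year is an approximation.

```python
# Convert frequencies observed over days, weeks, or months into events per year.
PERIODS_PER_YEAR = {"day": 365, "week": 52, "month": 12, "year": 1}

# Hypothetical threat-vulnerability pairs: (events, per period, harm in dollars per successful exploit).
PAIRS = {
    ("phishing", "no multi-factor authentication"): (2, "month", 15_000),
    ("malware", "unpatched workstations"): (1, "week", 2_000),
    ("insider misuse", "excessive privileges"): (1, "year", 120_000),
}


def annual_risk(events: float, period: str, harm: float) -> float:
    """Risk = annualized frequency x harm."""
    return events * PERIODS_PER_YEAR[period] * harm


def rank_pairs(pairs: dict) -> list:
    """Calculate risk for each threat-vulnerability combination, largest first."""
    scored = [(annual_risk(*values), threat, vuln) for (threat, vuln), values in pairs.items()]
    return sorted(scored, reverse=True)


for risk, threat, vuln in rank_pairs(PAIRS):
    print(f"{threat} via {vuln}: ${risk:,.0f} per year")
```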

ISO/IEC 27005, published in 2008, states, “Quantitative risk analysis uses a scale with numerical values (rather than the descriptive scales used in qualitative risk analysis) for both consequences and likelihood, using data from a variety of sources. The quality of the analysis depends on the accuracy and completeness of the numerical values and the validity of the models used. Quantitative risk analysis, in most cases, uses historical incident data, providing the advantage that it can be related directly to the organization’s information security objectives and concerns. One disadvantage is the lack of such data on new risks or information security weaknesses. A disadvantage of the quantitative approach can occur where factual, auditable data is unavailable, thus creating an illusion of worth and accuracy of the risk assessment.”

ISO Guide 73:2009, “Risk Management Vocabulary,” provides a basic vocabulary of risk management concepts.

- Risk is often expressed as a combination of an event’s consequences and the associated likelihood of occurrence. It is often related to objectives such as financial, safety, and environmental goals. Risk can apply at different levels of the organization, including strategic, organizational-wide, project, product, and process. It can be described by four elements: source, events, cause, and consequences.

- Exposure is the extent to which an organization is subject to an event.

- Probability is a measure of the chance of occurrence, expressed as a number between 0 and 1.

- Frequency is the number of events or outcomes in a period of time.
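
The distinction between frequency and probability matters when converting one to the other: a frequency can exceed 1 (for example, three events per year), whereas a probability cannot. Assuming events arrive independently at a constant average rate (a Poisson process, which is a modeling assumption rather than something Guide 73 prescribes), the probability of at least one event in a period of t years is 1 - e^(-frequency × t).

```python
import math


def probability_at_least_one(frequency_per_year: float, years: float = 1.0) -> float:
    """P(at least one event) for a Poisson process with the given annual frequency."""
    if frequency_per_year < 0 or years < 0:
        raise ValueError("frequency and period must be non-negative")
    # 1 - exp(-rate * t), computed with expm1 for accuracy when rate * t is small
    return -math.expm1(-frequency_per_year * years)


for frequency in (0.1, 1, 3):
    p = probability_at_least_one(frequency)
    print(f"{frequency} events/year -> P(at least one in a year) = {p:.3f}")
# 0.1 -> 0.095, 1 -> 0.632, 3 -> 0.950
```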

The National Institute of Standards and Technology (NIST) Special Publication 800-39, “Managing Information Security Risk: Organization, Mission, and Information System View,” defines risk as a measure of the extent to which an entity is threatened by a potential circumstance or event, and typically a function of (a) the adverse impacts that would arise if the circumstance or event occurs and (b) the likelihood of occurrence.

Measuring Risk in The Risk Management Process

NIST Publication Cyber Security Metrics and Measures (2009) provides a good foundation for the subject of measuring risk. To begin with, it clarifies the difference between metrics and measures. Metrics are tools to support decision-making. Measures are quantifiable, observable, and objective data that support metrics. Together, measures and metrics are used to (1) verify security controls are in compliance with policies and procedures, (2) identify security strengths and weaknesses, and (3) identify trends. The accuracy of a metric is dependent upon the accuracy of the supporting measures.

An organization could set a benchmark (goal) and then measure performance against it; for example, the benchmark might be that 80% of systems comply with the policy. Metrics and supporting measures are then selected to track status against this benchmark. Organizations benefit when measures can be automated and when data sources and collection mechanisms already exist. Measures can be analyzed in a variety of ways; for example, they can be categorized, grouped, and then compared.
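
A minimal sketch of the benchmark example: per-system compliance records (the measures) are grouped by category and turned into a compliance rate (the metric), which is then compared with the 80% goal. The system names, categories, and results are hypothetical and stand in for data from an automated collection mechanism.

```python
from collections import defaultdict

BENCHMARK = 0.80  # goal: 80% of systems comply with the policy

# Hypothetical measures, e.g. one record per system from an automated configuration scan.
MEASURES = [
    ("web-01", "servers", True),
    ("web-02", "servers", True),
    ("db-01", "servers", False),
    ("db-02", "servers", True),
    ("ws-01", "workstations", True),
    ("ws-02", "workstations", True),
    ("ws-03", "workstations", True),
    ("ws-04", "workstations", True),
    ("ws-05", "workstations", False),
]


def compliance_by_category(measures) -> dict:
    """Metric: share of compliant systems in each category."""
    totals = defaultdict(int)
    compliant = defaultdict(int)
    for _system, category, is_compliant in measures:
        totals[category] += 1
        compliant[category] += int(is_compliant)
    return {category: compliant[category] / totals[category] for category in totals}


for category, rate in compliance_by_category(MEASURES).items():
    status = "meets" if rate >= BENCHMARK else "below"
    print(f"{category:<13} {rate:.0%} ({status} the {BENCHMARK:.0%} benchmark)")
```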

Measures and metrics have meaning when they are contextualized. For example, tracking a metric to see whether it is rising or falling puts it into context.
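
A metric's direction can be derived from its history. The sketch below fits a least-squares slope to a series of periodic values and labels the trend rising, falling, or flat; the tolerance and the sample series are hypothetical. Whether “rising” is good news depends on the metric: a rising compliance rate is welcome, while a rising count of open vulnerabilities is not.

```python
def slope(values: list) -> float:
    """Least-squares slope of values against their period index (0, 1, 2, ...)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two periods to measure a trend")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    numerator = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    denominator = sum((x - mean_x) ** 2 for x in range(n))
    return numerator / denominator


def direction(values: list, tolerance: float = 0.005) -> str:
    """Label the trend, ignoring changes smaller than the tolerance per period."""
    s = slope(values)
    if s > tolerance:
        return "rising"
    if s < -tolerance:
        return "falling"
    return "flat"


monthly_compliance = [0.72, 0.75, 0.78, 0.81]  # hypothetical monthly metric values
print(direction(monthly_compliance))  # rising
```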

NIST Special Publication 800-30, “Guide for Conducting Risk Assessments,” provides guidance on where risk analysis fits into the risk management process.

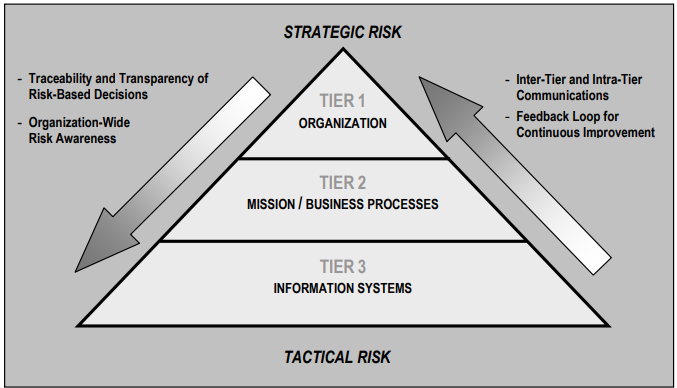

Figure 1 Risk Management Hierarchy – NIST 800-30

Risk management and risk analysis are applicable at all organizational levels. The methods selected and applied depend on the level of the organization, its purpose, and the data available.

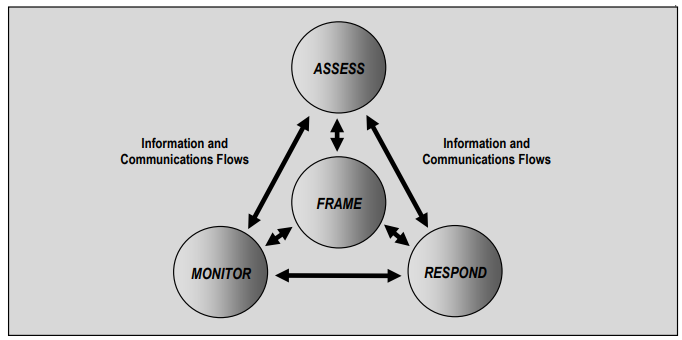

Figure 2 Risk Assessment Within the Risk Management Process – NIST 800-30

The first step of risk management is determining how to frame risk or establish context. This establishes the strategy for assessing, responding to, and monitoring the risk.

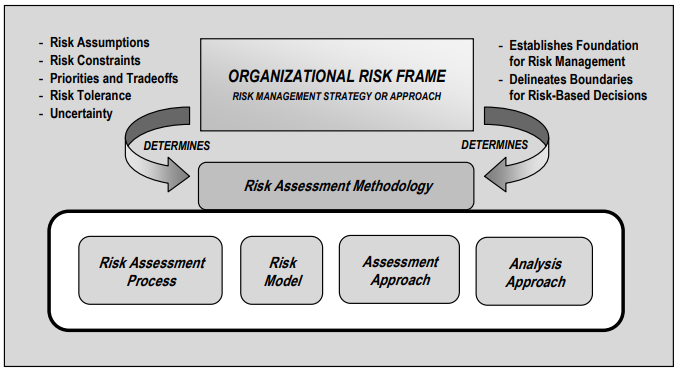

Figure 3 Relationship Among Risk Framing Components – NIST 800-30

Organizations can use a single assessment method or multiple methods, depending on their needs and the available data.

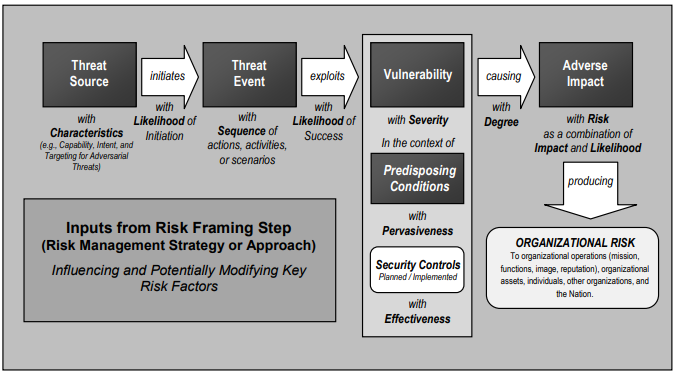

Figure 4 Generic Risk Model With Key Risk Factors – NIST SP 800-30

The Australian and New Zealand standard AS/NZS ISO 31000:2009, “Risk Management – Principles and guidelines,” states that all organizations manage risk by identifying it, analyzing it, and then applying risk treatment to meet their risk criteria (appetite). Risk management applies to the entire organization, at various levels, and to specific functions, projects, and activities. AS/NZS ISO 31000:2009 is the Australian and New Zealand adoption of the international standard ISO 31000, “Risk Management – Principles and guidelines.”

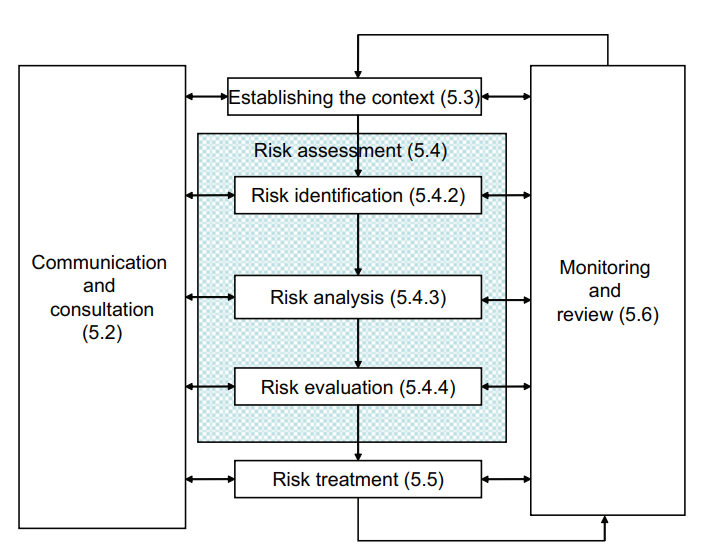

Figure 5 ISO 31000 Risk Management Process

When a standard framework of risk management is adopted, organizational benefits include:

- Increased likelihood of achieving objectives.

- Improved identification of threats.

- Improved governance and stakeholder confidence and trust.

- A reliable basis for decision-making and planning.

- Effective allocation of resources.

- Improved operational effectiveness and efficiency.

- Improved loss prevention and incident management.

- Minimized losses.

- Increased organizational resilience.

- Effective management of risk.

Risk analysis provides the basis for decisions about risk treatment. It includes risk estimation. Analysis involves considering the cause, consequences, and likelihood of event occurrence. It can be qualitative, semi-quantitative, quantitative, or some combination of the three. Depending on the purpose and available data, analysis can be high-level or detailed. An initial analysis may lead to the decision to undertake further analysis.
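
To illustrate the middle option, a semi-quantitative analysis assigns numeric scores to descriptive scales and combines them. The five-point scales, the multiplication rule, and the band thresholds below are hypothetical assumptions chosen for illustration.

```python
# Hypothetical five-point ordinal scales.
LIKELIHOOD = {"rare": 1, "unlikely": 2, "possible": 3, "likely": 4, "almost certain": 5}
CONSEQUENCE = {"insignificant": 1, "minor": 2, "moderate": 3, "major": 4, "severe": 5}


def score(likelihood: str, consequence: str) -> int:
    """Semi-quantitative risk score from 1 to 25."""
    return LIKELIHOOD[likelihood] * CONSEQUENCE[consequence]


def band(value: int) -> str:
    """Map a score to a qualitative band (thresholds are assumptions)."""
    if value >= 15:
        return "high"
    if value >= 8:
        return "medium"
    return "low"


for likelihood, consequence in [("likely", "major"), ("unlikely", "severe"), ("possible", "minor")]:
    s = score(likelihood, consequence)
    print(f"{likelihood} / {consequence}: score {s}, {band(s)}")
```

The scores are ordinal ranks rather than measurements, so a score of 16 does not mean twice the risk of a score of 8. A quantitative analysis, such as an ALE calculation, instead expresses risk in units like dollars per year.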

Risk Quantification Roles and Skills

Risk quantification, the measurement and analysis of potential cybersecurity risks, is a vital and complex process that requires specialized skills and expertise. Every organization is different, but below are some typical roles and skills for quantifying cyber risk.

Common Risk Quantification Roles

- Risk Analysts are responsible for identifying and assessing potential risks to an organization’s operations, reputation, and financial stability. They use various tools and techniques to measure and analyze risks and develop strategies to manage them.

- Data Analysts collect, analyze, and interpret data to identify trends, patterns, and insights. They use statistical models and data visualization tools to identify potential risks and assess their potential impact.

- Risk Managers are responsible for overseeing an organization’s risk management program. They develop and implement risk management strategies and ensure that the organization complies with regulatory requirements and industry standards.

- Cybersecurity Experts are responsible for identifying and mitigating cybersecurity risks. They have expertise in cybersecurity tools and techniques and are responsible for implementing security controls and monitoring systems to detect potential threats.

Common Risk Quantification Skills

- Quantitative Analysis is a crucial skill required for risk quantification. It uses statistical models and data analysis techniques to measure and analyze potential risks. This skill requires expertise in statistical analysis and data visualization tools.

- Risk Assessment is a critical skill required for risk quantification. It involves identifying potential risks and assessing their potential impact on an organization’s operations, reputation, and financial stability. This skill requires expertise in risk management frameworks and methodologies.

- Business Acumen is a key skill required for risk quantification. It involves understanding an organization’s business model, operations, and financials. This skill is essential for developing risk management strategies tailored to an organization’s specific needs and risk profile.